Ever wondered how facial recognition or object recognition works? How, in your favorite Marvel movie, Avengers, S.H.I.E.L.D. can recognize Loki just from the video feed of a CCTV camera? This has little to do with the CCTV camera itself; instead, it's the deep learning model that allows them to identify him from any video feed. This is the power of computer vision.

Let us create a simple project to detect certain objects or people using our own Mac camera, or any other spare camera that you may have, using Amazon Web Services.

In this blog, you will see how to ingest audio/video feeds from any live camera into Amazon Kinesis Video Streams and analyze them with machine learning algorithms using Amazon SageMaker. We'll also use a few other services such as Lambda, S3, EC2, IAM, ECS, CloudFormation, ECR, and CloudWatch to power our model.

Amazon Kinesis Video Streams makes it easy to securely stream audio and video from connected devices to AWS for analytics, machine learning, playback, and other processing.

Amazon SageMaker is a platform that helps you build, train, and deploy ML models quickly and easily.

Now, let us learn how to identify objects in video streams using SageMaker, step by step.

Step 1: Create a Video Stream Using the Console:

- First of all, we need to create the video stream that will receive the live data directly from the camera.

- Open the console at https://console.aws.amazon.com/kinesisvideo/home and choose Create video stream to start.

- Enter a name for the video stream, leave the default configuration as it is, and click Create Stream.
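If you prefer to script this step instead of clicking through the console, the same stream can be created with boto3. This is only a minimal sketch: the function names are made up for illustration, and the 24-hour retention value is an assumption, so adjust it to whatever your console defaults show.

```python
def build_create_stream_request(stream_name, retention_hours=24):
    """Return keyword arguments for the Kinesis Video Streams CreateStream call.

    retention_hours=24 is an assumed value, not taken from the console.
    """
    if not stream_name:
        raise ValueError("stream_name must not be empty")
    return {
        "StreamName": stream_name,
        "DataRetentionInHours": retention_hours,
    }


def create_video_stream(stream_name, region_name, retention_hours=24):
    """Create the stream and return its ARN (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    client = boto3.client("kinesisvideo", region_name=region_name)
    request = build_create_stream_request(stream_name, retention_hours)
    response = client.create_stream(**request)
    return response["StreamARN"]


# Example (hypothetical stream name and region):
# arn = create_video_stream("my-camera-stream", "us-east-1")
```

Keeping the request builder separate from the AWS call makes it easy to check the parameters before anything is actually created in your account.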

Step 2: Create an IAM user with CLI access and permissions to stream video to Kinesis Video Streams:

- In the second step, create an IAM user with CLI access so that it can stream video to Kinesis Video Streams from the command line, and grant it the permissions below.

- This user will be responsible for starting the live stream. In the policy below, each "<>" is a placeholder for the resource ARN (typically the ARN of your video stream).

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "kinesisvideo:DescribeStream",
      "Resource": "<>"
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": "kinesisvideo:GetDataEndpoint",
      "Resource": "<>"
    },
    {
      "Sid": "VisualEditor2",
      "Effect": "Allow",
      "Action": "kinesisvideo:PutMedia",
      "Resource": "<>"
    }
  ]
}
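The three permissions above can also be generated from code, which helps avoid copy-paste mistakes in the resource ARN. The sketch below folds the three actions into a single statement, which is a simplification of the policy shown above rather than an exact copy. The ARN in the example is a made-up placeholder, and `build_producer_policy` is a hypothetical helper name.

```python
import json

# The three actions the streaming user needs, as listed in the policy above.
KVS_PRODUCER_ACTIONS = [
    "kinesisvideo:DescribeStream",
    "kinesisvideo:GetDataEndpoint",
    "kinesisvideo:PutMedia",
]


def build_producer_policy(stream_arn):
    """Return an IAM policy document allowing a producer to stream to one stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": KVS_PRODUCER_ACTIONS,
                "Resource": stream_arn,
            }
        ],
    }


# Placeholder ARN for illustration only; use the ARN of your own stream.
example_arn = (
    "arn:aws:kinesisvideo:us-east-1:111122223333:"
    "stream/my-camera-stream/1234567890123"
)
print(json.dumps(build_producer_policy(example_arn), indent=2))
```

The printed JSON can be pasted into the IAM policy editor when you create the user.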

Step 3: Kinesis Video Producer setup:

- To start streaming from any camera, we need the Kinesis Video Streams producer SDK, which captures the video from the camera and sends it to your Kinesis video stream.

- Open a terminal and clone the Kinesis video producer using the below command.

- git clone https://github.com/awslabs/amazon-kinesis-video-streams-producer-sdk-cpp.git

- Run the below commands to install the libraries required to run the producer:

- For macOS: $ brew install pkg-config openssl cmake gstreamer gst-plugins-base gst-plugins-good gst-plugins-bad gst-plugins-ugly log4cplus gst-libav

- For Linux: $ sudo apt-get install libssl-dev libcurl4-openssl-dev liblog4cplus-dev libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev gstreamer1.0-plugins-base-apps gstreamer1.0-plugins-bad gstreamer1.0-plugins-good gstreamer1.0-plugins-ugly gstreamer1.0-tools

- Now create the build directory in the producer SDK.

- mkdir -p amazon-kinesis-video-streams-producer-sdk-cpp/build

- cd amazon-kinesis-video-streams-producer-sdk-cpp/build

- In the build folder, run the cmake and make commands as mentioned below:

- cmake .. -DBUILD_DEPENDENCIES=OFF -DBUILD_GSTREAMER_PLUGIN=ON

- make

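- Set the environment variables for the plugin and library paths. Both paths are relative to the SDK root directory, so go back to it first (cd ..) if you are still in the build folder:

- export GST_PLUGIN_PATH=`pwd`/build

- export LD_LIBRARY_PATH=`pwd`/open-source/local/lib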
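Because both exports depend on the directory you run them from, it can help to compute the paths explicitly. The sketch below derives the two variables from the SDK root and then checks whether GStreamer can find the kvssink plugin. The helper names are hypothetical, and the check assumes that gst-inspect-1.0 exits with a non-zero status when the plugin is missing.

```python
import os
import shutil
import subprocess
from pathlib import Path


def producer_environment(sdk_root):
    """Return the two environment variables from this step as absolute paths."""
    root = Path(sdk_root).resolve()
    return {
        "GST_PLUGIN_PATH": str(root / "build"),
        "LD_LIBRARY_PATH": str(root / "open-source" / "local" / "lib"),
    }


def kvssink_available(sdk_root):
    """Return True if gst-inspect-1.0 can load the kvssink plugin."""
    if shutil.which("gst-inspect-1.0") is None:
        return False  # GStreamer tools are not installed or not on PATH
    env = {**os.environ, **producer_environment(sdk_root)}
    result = subprocess.run(
        ["gst-inspect-1.0", "kvssink"],
        env=env,
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


# Example (path is an assumption about where you cloned the SDK):
# print(kvssink_available("amazon-kinesis-video-streams-producer-sdk-cpp"))
```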

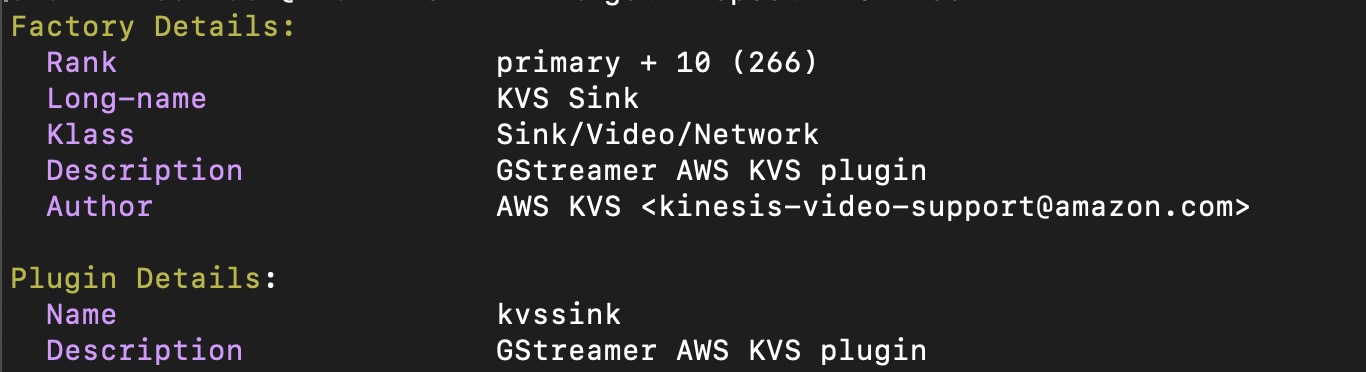

Now, when you execute the code: gst-inspect-1.0 kvssink youundefinedll get the information on the plugin such as below:

Step 4: Create an Amazon SageMaker inference endpoint:

- Now that our video stream is all set up, we need to train an object detection model to identify the objects in the video stream data.

- Create a notebook instance, choose Open JupyterLab, then paste the object detection code from the below link and run it: https://github.com/aws/amazon-sagemaker-examples/blob/af6667bd0be3c9cdec23fecda7f0be6d0e3fa3ea/introduction_to_amazon_algorithms/object_detection_pascalvoc_coco/object_detection_image_json_format.ipynb

- This step will train and test our model and upload it to the S3 bucket.

- Now go to the Endpoints tab and create an endpoint for the trained model; we will need it in the next step.

- The SageMaker endpoint hosts our deployed model, so frames from the video stream can be sent to it for inference.

- An Amazon ECS cluster running on AWS Fargate will get the data from the video stream and feed it to the endpoint.

- The Fargate task receives media fragments from Kinesis Video Streams. It then parses these fragments to extract the H.264 chunks, samples the frames that need decoding, decodes the I-frames, and converts them into an image format such as JPEG or PNG before invoking the Amazon SageMaker endpoint.
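To make the last part of that flow concrete, here is a rough sketch of what "invoking the endpoint" with a single JPEG frame could look like from Python. It assumes the endpoint runs the built-in SageMaker object detection algorithm, whose JSON response holds a "prediction" list of [class_id, score, xmin, ymin, xmax, ymax] entries. The function names and the 0.2 threshold are illustrative choices, and this is not the code that the Fargate worker itself runs.

```python
import json


def parse_detections(response_body, min_confidence=0.2):
    """Parse the JSON returned by the object detection endpoint.

    Each prediction is [class_id, score, xmin, ymin, xmax, ymax], with box
    coordinates given as fractions of the image width and height.
    """
    predictions = json.loads(response_body)["prediction"]
    detections = []
    for class_id, score, xmin, ymin, xmax, ymax in predictions:
        if score >= min_confidence:
            detections.append(
                {
                    "class_id": int(class_id),
                    "confidence": score,
                    "box": (xmin, ymin, xmax, ymax),
                }
            )
    return detections


def detect_objects(endpoint_name, jpeg_bytes, region_name, min_confidence=0.2):
    """Send one JPEG frame to the endpoint (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so parse_detections works without boto3

    runtime = boto3.client("sagemaker-runtime", region_name=region_name)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="image/jpeg",
        Body=jpeg_bytes,
    )
    return parse_detections(response["Body"].read(), min_confidence)


# Example (hypothetical endpoint name and file):
# with open("frame.jpg", "rb") as f:
#     print(detect_objects("my-object-detection-endpoint", f.read(), "us-east-1"))
```

Testing the endpoint this way with a still image is a quick sanity check before wiring it up to the live stream.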

Step 5: Launch the AWS CloudFormation template to automate the whole process:

- The next step is to automate the whole process, from getting the video stream into AWS to getting the final predicted result, using an AWS CloudFormation template.

- Use one of the below links to open the AWS CloudFormation console for your AWS Region. Each link launches the correct stack for its region:

- Launch in Asia Pacific (Sydney) Region (ap-southeast-2)

- Launch in Asia Pacific (Tokyo) Region (ap-northeast-1)

- Launch in Europe (Frankfurt) Region (eu-central-1)

- Launch in Europe (Ireland) Region (eu-west-1)

- Launch in US East (N. Virginia) Region (us-east-1)

- Launch in US West (Oregon) Region (us-west-2)

- On the Create Stack page, provide the stack name, the name of the SageMaker endpoint that we created in the last step, and your Kinesis video stream name, then click Next.

- On the Options page, keep the settings intact, select the acknowledgment checkbox, and click Next.

- The AWS CloudFormation template creates the following resources:

- An Amazon ECS cluster that uses the AWS Fargate compute engine.

- An Amazon DynamoDB table that maintains checkpoints and related state across the workers that run on Fargate.

- A Kinesis Data Stream that captures the inference outputs generated from SageMaker.

- An AWS Lambda Function for parsing the output from SageMaker.

- IAM resources for providing access across services.

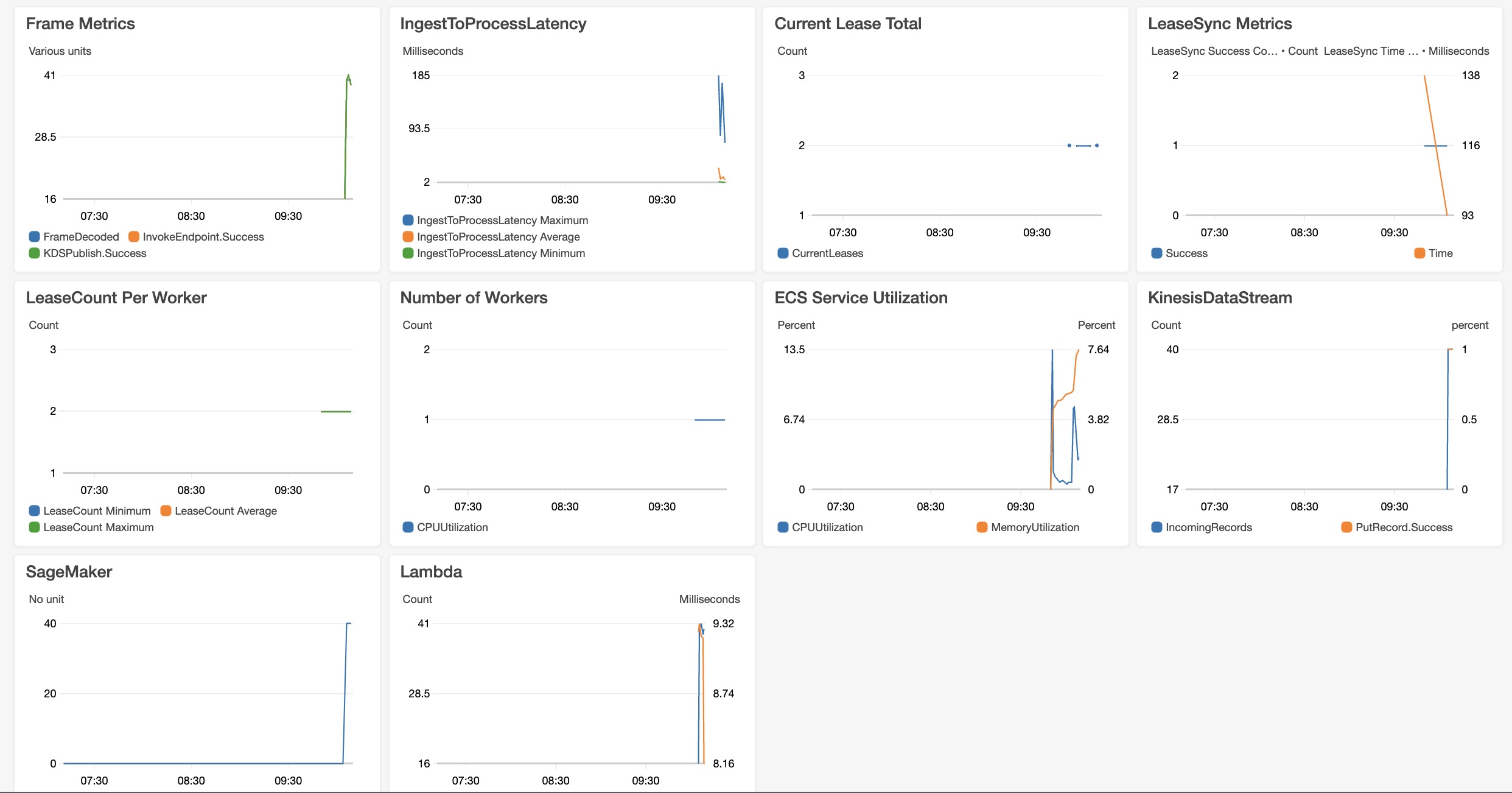

- AWS Cloudwatch resources for monitoring the application.
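The Create Stack form described in this step can also be submitted with boto3 if you want to repeat the setup. Treat the following as a sketch under assumptions: the template URL is not given here, so it is passed in as an argument, and the parameter keys in the example ("SageMakerEndpoint", "StreamNames") are hypothetical, so check the template for the real names. The Capabilities entry plays the role of the acknowledgment checkbox, and some templates need CAPABILITY_NAMED_IAM instead.

```python
def build_stack_request(stack_name, template_url, parameters):
    """Return keyword arguments for the CloudFormation CreateStack call."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in sorted(parameters.items())
        ],
        # Equivalent of ticking the IAM acknowledgment checkbox in the console.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def launch_stack(stack_name, template_url, parameters, region_name):
    """Create the stack and return its ID (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    cloudformation = boto3.client("cloudformation", region_name=region_name)
    request = build_stack_request(stack_name, template_url, parameters)
    return cloudformation.create_stack(**request)["StackId"]


# Example with hypothetical parameter keys and values:
# launch_stack(
#     "kvs-object-detection",
#     template_url,  # the template URL behind the launch link for your region
#     {"SageMakerEndpoint": "my-endpoint", "StreamNames": "my-camera-stream"},
#     "us-east-1",
# )
```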

- Now everything has been set up, and we can start streaming. In your terminal, run the below command to start streaming from your macOS camera.

gst-launch-1.0 autovideosrc ! videoconvert ! video/x-raw,format=I420,width=640,height=480,framerate=30/1 ! vtenc_h264_hw allow-frame-reordering=FALSE realtime=TRUE max-keyframe-interval=45 bitrate=500 ! h264parse ! video/x-h264,stream-format=avc,alignment=au,profile=baseline ! kvssink stream-name="YOUR KINESIS VIDEO STREAM NAME" storage-size=128 access-key="YOUR ACCESS KEY" secret-key="YOUR SECRET KEY" aws-region="YOUR REGION"

- This command will start the stream from your Mac camera, and you can verify it in the Kinesis Video Streams console.

- To start the live stream from other cameras, you can find the commands for different camera sources at the below link:

https://github.com/awslabs/amazon-kinesis-video-streams-producer-sdk-cpp/blob/master/docs/macos.md
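The streaming command is long, and it is easy to leave a key in your shell history by accident. One option is to assemble it in a small script and read the credentials from environment variables. The sketch below rebuilds the macOS pipeline shown earlier as an argument list; the function name is hypothetical, and the environment variable names in the example are an assumption about how you store your keys.

```python
def build_macos_stream_command(stream_name, access_key, secret_key, region):
    """Return the gst-launch-1.0 command for the Mac camera as an argument list."""
    stages = [
        ["autovideosrc"],
        ["videoconvert"],
        ["video/x-raw,format=I420,width=640,height=480,framerate=30/1"],
        [
            "vtenc_h264_hw",
            "allow-frame-reordering=FALSE",
            "realtime=TRUE",
            "max-keyframe-interval=45",
            "bitrate=500",
        ],
        ["h264parse"],
        ["video/x-h264,stream-format=avc,alignment=au,profile=baseline"],
        [
            "kvssink",
            f"stream-name={stream_name}",
            "storage-size=128",
            f"access-key={access_key}",
            f"secret-key={secret_key}",
            f"aws-region={region}",
        ],
    ]
    command = ["gst-launch-1.0"]
    for index, stage in enumerate(stages):
        if index > 0:
            command.append("!")  # GStreamer's separator between pipeline elements
        command.extend(stage)
    return command


# Example: read the keys from the environment and start streaming.
# import os, subprocess
# command = build_macos_stream_command(
#     "my-camera-stream",
#     os.environ["AWS_ACCESS_KEY_ID"],
#     os.environ["AWS_SECRET_ACCESS_KEY"],
#     "us-east-1",
# )
# subprocess.run(command, check=True)
```

Passing the command as a list avoids shell quoting issues with the stream name and keys.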

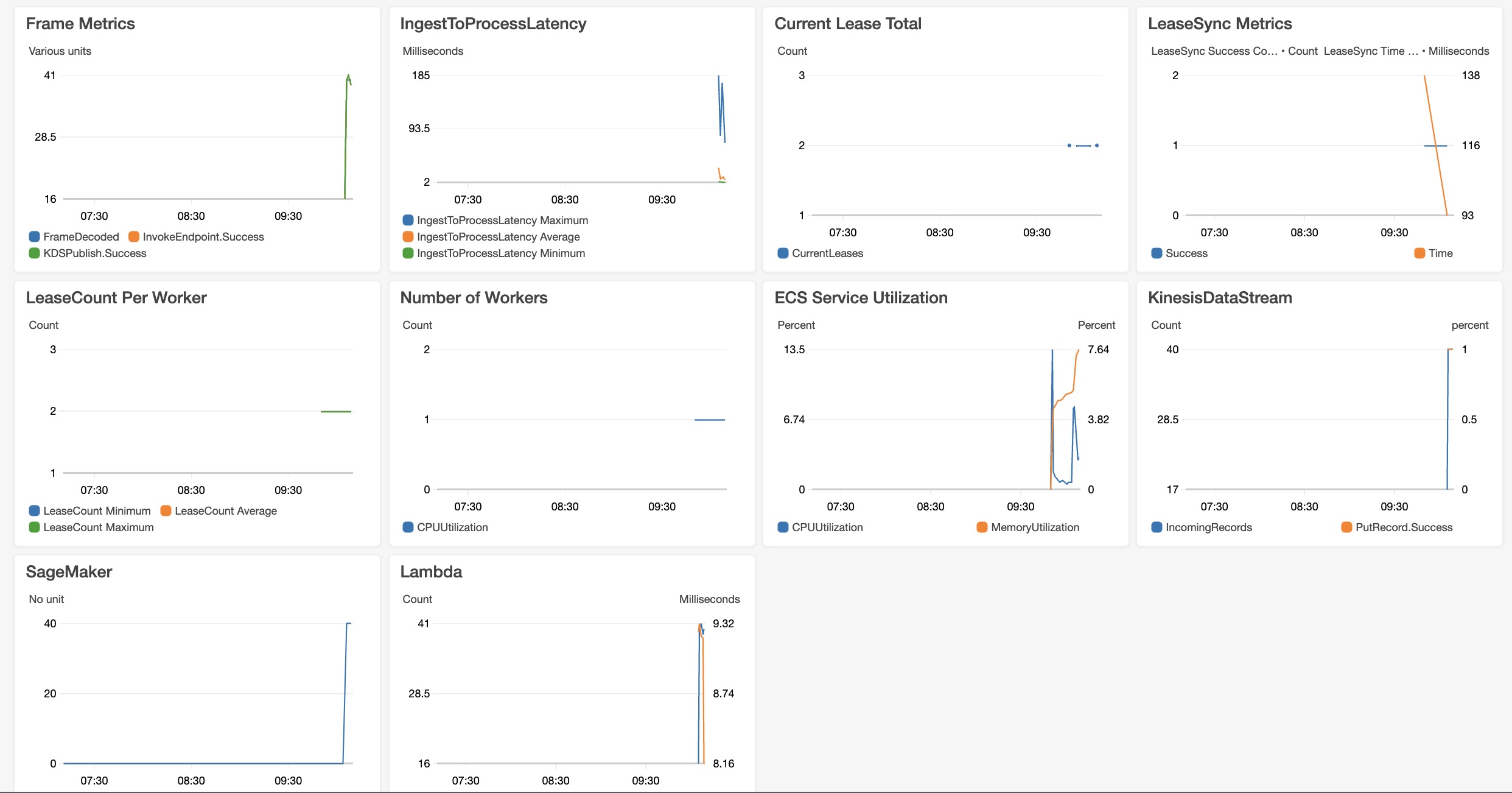

- Now the object detection is online and can be observed in the CloudWatch Logs.

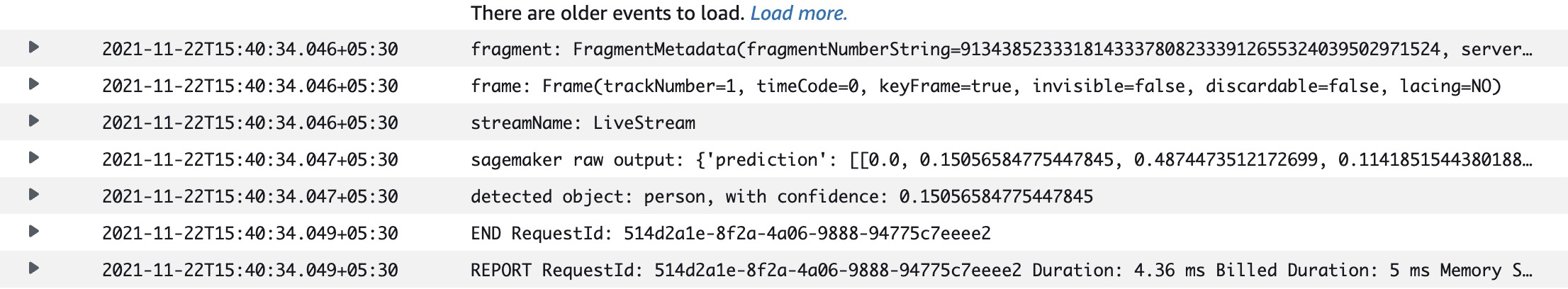

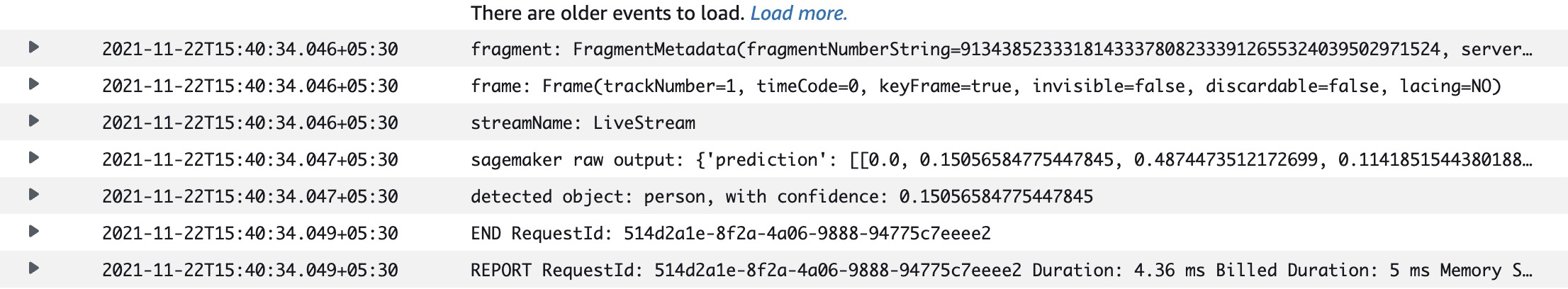

CloudWatch Logs:

- Go to CloudWatch and check the Dashboard for logs.

Lambda Function Logs:

- In CloudWatch, select Log groups and choose the LambdaFunction logs to watch the prediction results.

- Here you will find log entries for the detected objects, such as: Person, with confidence: 0.150.
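To give a feel for where such a log line could come from, here is a simplified sketch of a Lambda handler that reads records from the Kinesis Data Stream and prints one line per detection. The real function deployed by the template may differ. In particular, this sketch assumes each record's payload is the object detection JSON itself, and the `categories` tuple is a hypothetical class list that you would replace with the labels your model was trained on.

```python
import base64
import json


def format_detection(class_id, confidence, categories):
    """Build a log line such as 'Person, with confidence: 0.150'."""
    if 0 <= class_id < len(categories):
        name = categories[class_id]
    else:
        name = f"class {class_id}"
    return f"{name.capitalize()}, with confidence: {confidence:.3f}"


def lambda_handler(event, context, categories=("person",)):
    """Decode Kinesis records and log every detection they contain."""
    lines = []
    for record in event["Records"]:
        # Kinesis delivers each record's data base64-encoded.
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        for class_id, confidence, *_box in payload.get("prediction", []):
            line = format_detection(int(class_id), confidence, categories)
            print(line)  # print output ends up in the CloudWatch log group
            lines.append(line)
    return lines
```

Anything the function prints is captured by CloudWatch Logs, which is why the detections show up in the Lambda log group.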

Conclusion:

We have successfully detected an object/person from the stream. Now get creative and build your own model, maybe to track objects, detect vehicles, or count cars, and let us know your ideas. If you're stuck somewhere, feel free to reach out with your queries.

We, at Seaflux, are

Jay Mehta

Director of Engineering