A robust, adaptable system has become increasingly important as technology evolves. In today's complex, fast-paced world, systems need to handle events as they happen, respond to them in real time, and adjust their behavior accordingly. Event-Driven Architecture (EDA) addresses this need by organizing a system around the flow of events.

Imagine hosting a house party where everything is planned: when to serve drinks, when the dancing starts, when to serve food, and so on. Now replace the fixed schedule with a flexible one in which specific triggers, called events, cause specific actions. For example, a drink is served as soon as a guest enters the house: the guest's arrival is the trigger (the event), and serving the drink is the action taken in response. With that picture in mind, let's look at Event-Driven Architecture from a technical angle and see how to implement it using Kafka and Node.js.
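The party analogy can be sketched in a few lines with Node's built-in `EventEmitter`. This is only an in-process illustration of the trigger-and-action idea, not the Kafka setup itself; the event name `guestArrived`, the `serveDrink` handler, and the guest names are hypothetical.

```javascript
const { EventEmitter } = require('events');

// Hypothetical names for illustration: 'guestArrived' is the event,
// serveDrink is the action taken in response.
const party = new EventEmitter();
const served = [];

const serveDrink = (guestName) => {
  served.push(guestName);
  console.log(`Serving a drink to ${guestName}`);
};

// Register the handler: nothing happens until the event is emitted.
party.on('guestArrived', serveDrink);

// Each arrival is an event; the code emitting it does not need to know who reacts.
party.emit('guestArrived', 'Asha');
party.emit('guestArrived', 'Ben');
```

The emitter and the handler only share the event name, which is the same decoupling Kafka provides between producers and consumers across processes.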

Before we proceed with the implementation, let's verify that we meet the required prerequisites.

Node.js, built on Chrome's V8 JavaScript engine, is a runtime designed around asynchronous, event-driven principles. Its non-blocking I/O model makes it well suited to building scalable network applications.
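As a rough illustration of what non-blocking means, the sketch below uses a timer as a stand-in for an I/O operation (an assumption made to keep the example self-contained). The scheduled callback runs later, while the code after it continues immediately.

```javascript
const order = [];

// Schedule work for later; setTimeout returns immediately instead of waiting.
const timerFired = new Promise((resolve) => {
  setTimeout(() => {
    order.push('timer callback');
    resolve();
  }, 10);
});

// This runs before the callback above, because scheduling the timer did not block.
order.push('synchronous code');

timerFired.then(() => {
  console.log(order.join(' -> ')); // prints "synchronous code -> timer callback"
});
```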

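Apache Kafka is a distributed event streaming platform and a solid foundation for event-driven architectures. Producers publish events to named topics, and consumers subscribe to those topics and process events as they arrive, which makes Kafka a common choice for handling real-time data.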

Make sure you have Node.js installed and a Kafka broker reachable at localhost:9092, the address the code below connects to. Create a new Node.js project and install the necessary packages:

npm init -y

npm install kafkajs

// Producer.js
const { Kafka, Partitioners } = require('kafkajs');

const kafka = new Kafka({
  clientId: 'iot-producer',
  brokers: ['localhost:9092'],
});

// LegacyPartitioner keeps the partitioning behavior used before kafkajs v2
const producer = kafka.producer({
  createPartitioner: Partitioners.LegacyPartitioner,
});

// Returns true once connected, false if the connection attempt failed
const connectProducer = async () => {
  try {
    await producer.connect();
    console.log('Producer Connected to Kafka!');
    return true;
  } catch (e) {
    console.error(`Error while connecting to Kafka: ${e}`);
    return false;
  }
};

const produceTemperatureReading = async () => {
  try {
    const temperature = Math.floor(Math.random() * 50) + 1; // Simulate temperature readings (1-50)
    const deviceID = Math.floor(Math.random() * 10) + 1; // Simulate different IoT devices (1-10)

    await producer.send({
      topic: 'temperatureData',
      messages: [{ value: JSON.stringify({ deviceID, temperature }) }],
    });

    console.log(`Temperature reading sent successfully: For DeviceID ${deviceID}`);
  } catch (e) {
    console.error(`Error while producing temperature reading: ${e}`);
  }
};

// Connect to Kafka first, then produce a temperature reading every second.
// Starting the interval only after connecting avoids sending on a disconnected producer.
connectProducer().then((connected) => {
  if (connected) {
    setInterval(produceTemperatureReading, 1000);
  }
});
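The producer above sends each reading without a key. Kafka preserves ordering only within a partition, so if the topic has several partitions and per-device ordering matters, one option is to set the message `key` to the device ID, since messages with the same key are normally routed to the same partition. The helper below is a hypothetical sketch (the name `buildTemperatureMessage` is not from the original code); it only builds the message object and does not talk to Kafka.

```javascript
// Hypothetical helper: builds a kafkajs-style message for one reading.
// The key is the device ID as a string, so readings from the same device
// share a key; the value is the same JSON payload the producer already sends.
const buildTemperatureMessage = (deviceID, temperature) => ({
  key: String(deviceID),
  value: JSON.stringify({ deviceID, temperature }),
});

// Assumed usage inside produceTemperatureReading:
//   await producer.send({
//     topic: 'temperatureData',
//     messages: [buildTemperatureMessage(deviceID, temperature)],
//   });

console.log(buildTemperatureMessage(7, 23));
```

With a single-partition topic, as in a default local setup, the key makes no visible difference; it only matters once the topic is split across partitions.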

// Consumer.js
const { Kafka } = require('kafkajs');

const kafka = new Kafka({
  clientId: 'iot-consumer',
  brokers: ['localhost:9092'],
});

const consumer = kafka.consumer({ groupId: 'iot-group' });

// Decode the message payload and log the reading
const processTemperatureReading = async (message) => {
  const { deviceID, temperature } = JSON.parse(message.value.toString());
  console.log(`Received Temperature Reading: DeviceID ${deviceID} - ${temperature}°C`);
};

const consumeTemperatureReadings = async () => {
  await consumer.connect();
  console.log('Consumer Connected to Kafka!');

  // fromBeginning: true reads the topic from its earliest offset when the group has no committed offset
  await consumer.subscribe({ topic: 'temperatureData', fromBeginning: true });

  await consumer.run({
    eachMessage: async ({ message }) => {
      await processTemperatureReading(message);
    },
  });
};

// Log startup failures instead of leaving the promise rejection unhandled
consumeTemperatureReadings().catch((e) => {
  console.error(`Error while consuming temperature readings: ${e}`);
});
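The consumer above assumes every message carries valid JSON with both fields present. In practice a topic can contain malformed or empty payloads, and an exception thrown while handling a message can disrupt consumption. A defensive parser is one way to handle this; the sketch below is hypothetical (the name `parseTemperatureReading` and the validation rules are assumptions, not part of the original demo) and returns `null` for anything it cannot interpret.

```javascript
// Hypothetical helper: returns { deviceID, temperature } or null if the
// payload is missing, is not valid JSON, or lacks the expected fields.
const parseTemperatureReading = (value) => {
  if (value == null) return null; // a Kafka message value can be null

  try {
    const reading = JSON.parse(value.toString());
    if (reading === null || typeof reading !== 'object') return null;

    const { deviceID, temperature } = reading;
    if (!Number.isInteger(deviceID) || !Number.isFinite(temperature)) return null;

    return { deviceID, temperature };
  } catch {
    return null; // malformed JSON
  }
};

// Assumed usage inside processTemperatureReading:
//   const reading = parseTemperatureReading(message.value);
//   if (reading === null) { console.warn('Skipping malformed message'); return; }

console.log(parseTemperatureReading(Buffer.from('{"deviceID":3,"temperature":21}')));
console.log(parseTemperatureReading(Buffer.from('not json')));
```

Skipping bad messages is only one policy; depending on the application, logging them or forwarding them to a separate topic may be more appropriate.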

Open two terminal windows and run the producer and consumer:

Terminal 1 (Producer):

node Producer.js

Terminal 2 (Consumer):

node Consumer.js

You should see the following output indicating that the producer sends a message, and the consumer receives and processes it.

Output:

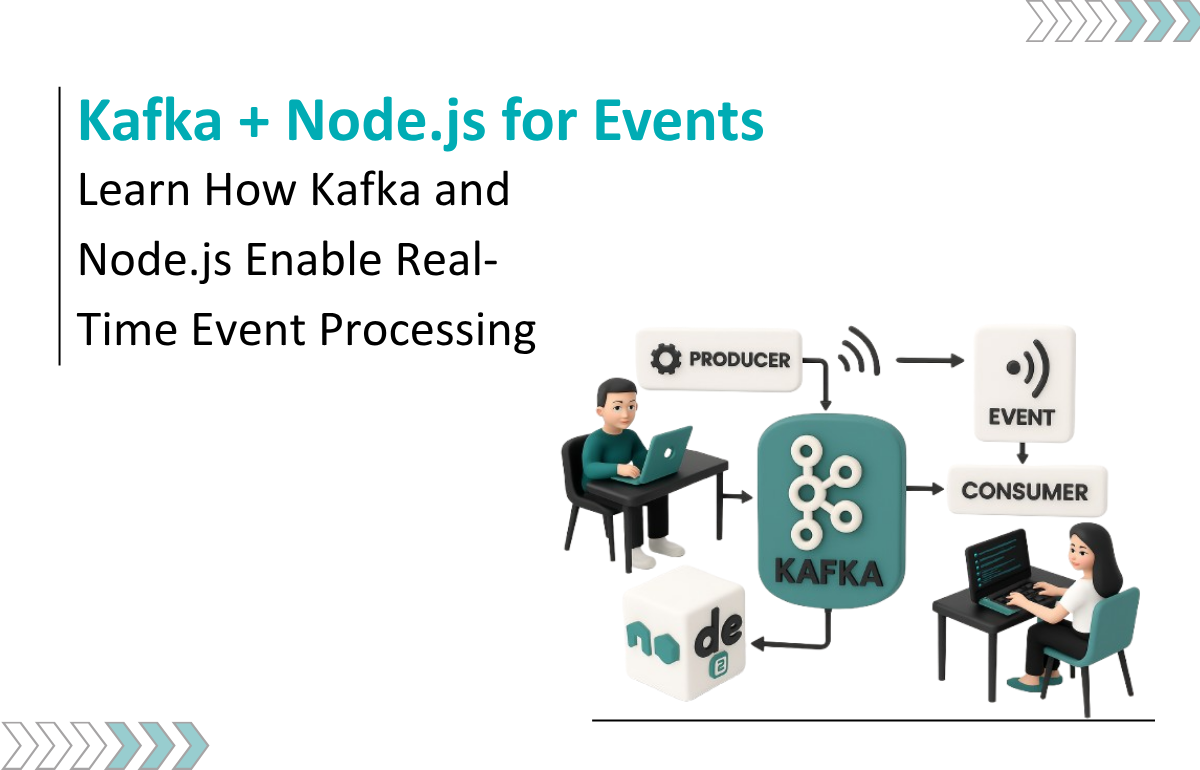

This concludes our exploration of Event-Driven Architecture using the combination of Kafka and Node.js. We covered what event-driven architecture is, its key components, and its benefits. We also got hands-on experience setting up Kafka and built a demo application to understand an event-driven microservice.

